These Algorithms Look at X-Rays—and Somehow Detect Your Race

By Tom Simonite, Wired | 08.05.2021

A study raises new concerns that AI will exacerbate disparities in health care. One issue? The study’s authors aren’t sure what cues are used by the algorithms.

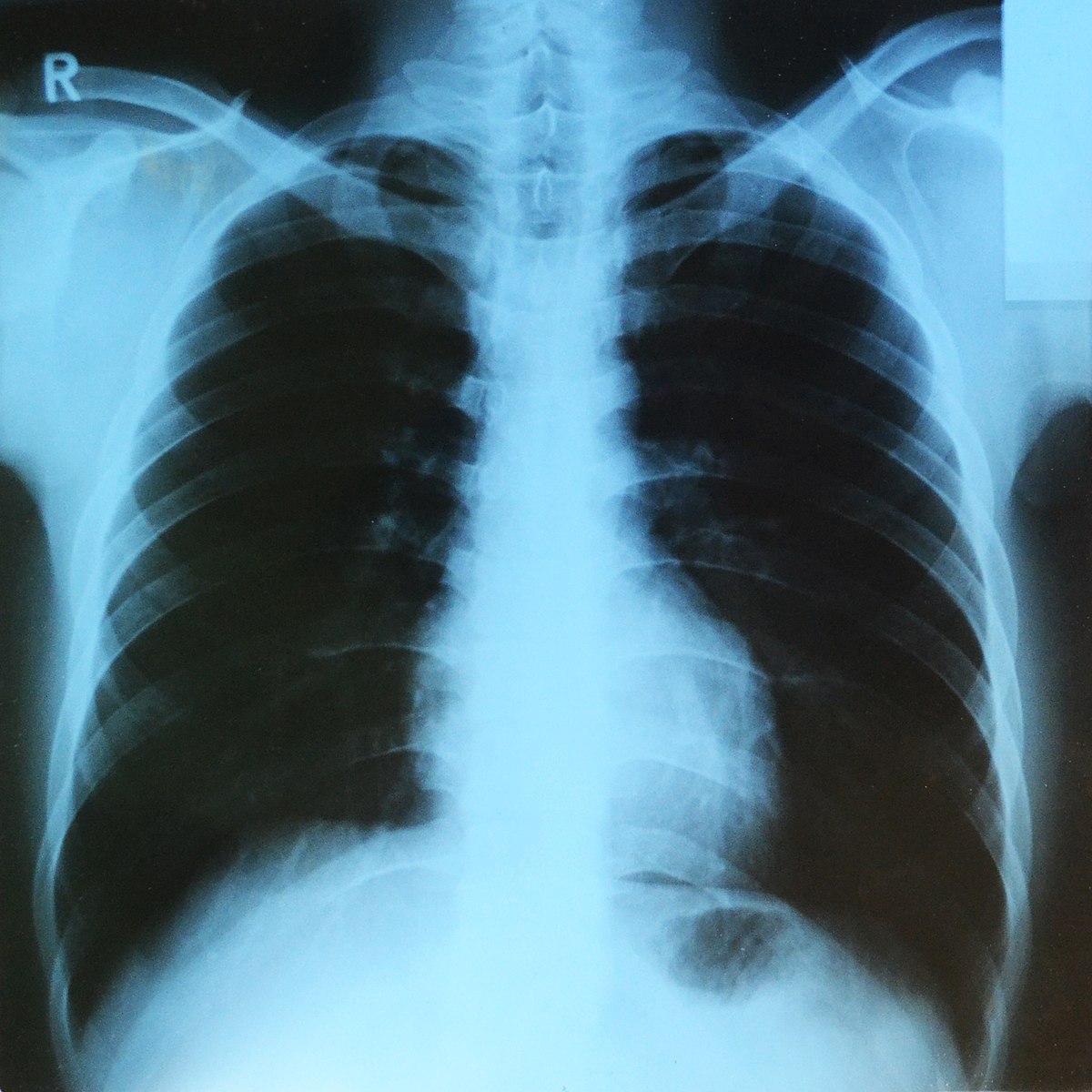

Photo licensed under CC BY-SA 4.0, via Wikimedia Commons

Millions of dollars are being spent to develop artificial intelligence software that reads x-rays and other medical scans in hopes it can spot things doctors look for but sometimes miss, such as lung cancers. A new study reports that these algorithms can also see something doctors don’t look for on such scans: a patient’s race.

The study authors and other medical AI experts say the results make it more crucial than ever to check that health algorithms perform fairly on people with different racial identities. Complicating that task: The authors themselves aren’t sure what cues the algorithms they created use to predict a person’s race.

Evidence that algorithms can read race from a person’s medical scans emerged from tests on five types of imagery used in radiology research, including chest and hand x-rays and mammograms. The images included patients who identified as Black, white, and Asian. For each type of scan, the researchers trained algorithms using images labeled with a patient’s self-reported race. Then they challenged the algorithms to predict...
